Exploring Generative UI for Automotive Interfaces

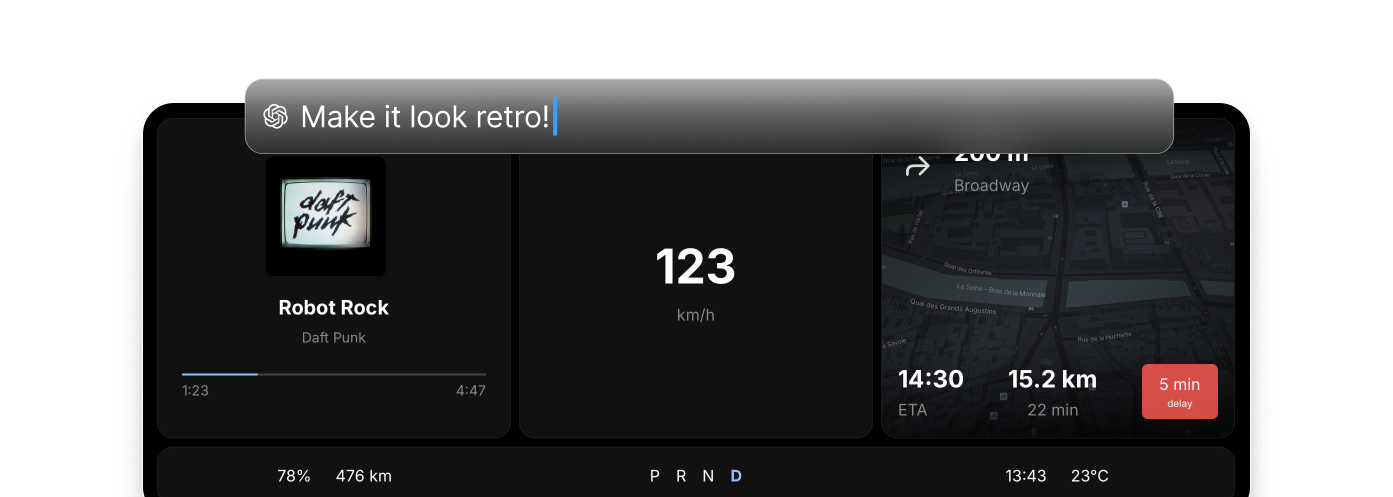

In a weekend exploration, I built a prototype that demonstrates how generative UI could be used in automotive interfaces to create personalized, on-demand user experiences. Generative user interfaces are created in real time by an AI rather than designed up front. This has the potential to produce hyper-individualized interfaces tailored to each person's specific needs. What happens if we apply this to cars? It's impossible to add every requested feature to a car, as the interface would become unusable. With generative UI, however, drivers could ask the car for any piece of information, and it would design and build an interface showing exactly what was asked for. This gives carmakers an opportunity to ship a base set of must-have features and let drivers ask for anything that is missing. To get a better picture of what this would take, and of what current LLMs are capable of, I spent a weekend exploring it through a side project focused on generative UI for automotive interfaces.

What I explored

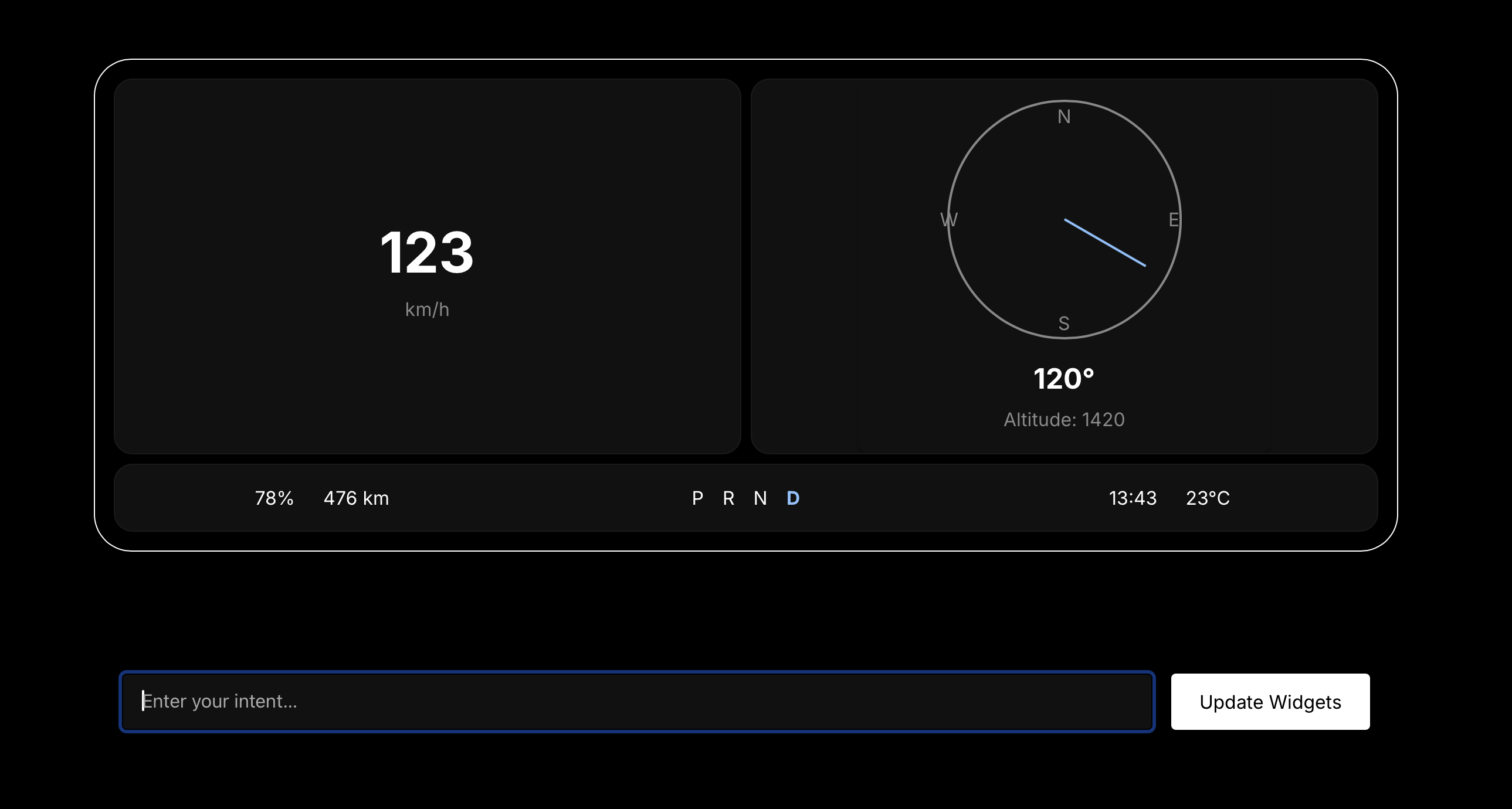

To keep the project small and contained, I chose to apply generative UI to the instrument cluster. I selected this area because it offers a constrained visual space with clear safety requirements, making it ideal for testing AI-generated interfaces. Typically, instrument clusters have a couple of pre-defined views—consumption graphs, trip info, or navigation details. However, a car has much more data available, and theoretically, drivers should be able to request specific information through natural language. For example: "Can you create a graph of my consumption over the last 5 kilometers compared to the average?" Or "Can you show me the rain forecast on top of the map?" Or "Can you give it a classic car look, or a Formula 1-inspired design?" Let's see if it's possible!

My process

First setup

I started by creating a prototype instrument cluster in React, designing it around a 'widgets' system. The layout supports either one large widget, two medium ones, or three small ones.
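The post doesn't show the layout code, so here is a minimal TypeScript sketch of the grid rule under one assumption: all widgets on screen share a single size. `WidgetSize`, `WidgetSlot`, `layoutWidgets` and the widget ids are hypothetical names, not the prototype's actual API.

```typescript
// Hypothetical model of the cluster grid: 1 large, 2 medium, or 3 small widgets.
type WidgetSize = "large" | "medium" | "small";

interface WidgetSlot {
  id: string; // illustrative widget id, e.g. "music"
  size: WidgetSize;
}

// Number of widgets of each size that fill the cluster.
const WIDGETS_PER_SIZE: Record<WidgetSize, number> = {
  large: 1,
  medium: 2,
  small: 3,
};

// Return the size that lets `count` widgets fill the grid, or null if none does.
function sizeForCount(count: number): WidgetSize | null {
  const sizes = Object.keys(WIDGETS_PER_SIZE) as WidgetSize[];
  return sizes.find((size) => WIDGETS_PER_SIZE[size] === count) ?? null;
}

// Give every widget the same size so the layout is always one of the three variants.
function layoutWidgets(ids: string[]): WidgetSlot[] {
  const size = sizeForCount(ids.length);
  if (size === null) {
    throw new Error(`The cluster fits 1, 2 or 3 widgets, got ${ids.length}`);
  }
  return ids.map((id) => ({ id, size }));
}

// Two widgets: both are rendered at medium size.
const slots = layoutWidgets(["music", "navigation"]);
```

Rejecting any other widget count up front means a model-proposed layout can never overflow the cluster.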

One challenge I anticipated was that the LLM would need design guidelines: without them, the AI would create UI that looked completely inconsistent with the rest of the system. To address this, I created a 'theme' file with basic CSS style definitions that the LLM could use. I also set up a mock database of vehicle data that the AI could access to populate the widgets. I chose GPT-4o mini for its balance of cost and speed, then wrote system prompts that allow the model to generate UI components. Very quickly, I had a basic setup with a chat interface that queried ChatGPT to create widgets. As I expected, the LLM excelled at writing code but struggled with design. Many of the UI elements it generated were non-functional or broken, and most lacked creativity.
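One way the theme and the mock data could reach the model is by embedding them in the system prompt. The sketch below assumes the theme is exposed as CSS custom properties; the token names, data fields and prompt wording are all hypothetical, since the post doesn't share its theme file, database or prompts.

```typescript
// Hypothetical theme tokens (the prototype's actual 'theme' file isn't shown).
const theme = {
  fontFamily: "Inter, sans-serif",
  colorBackground: "#0b0b0f",
  colorText: "#f5f5f5",
  colorAccent: "#3fa7ff",
};

// Hypothetical mock vehicle data that generated widgets may read.
const vehicleData = {
  speedKmh: 87,
  batteryPercent: 64,
  rangeKm: 312,
  outsideTempC: 14,
};

// Convert camelCase tokens into CSS custom properties, e.g. colorAccent -> --color-accent.
function themeToCss(tokens: Record<string, string>): string {
  const lines = Object.entries(tokens).map(([key, value]) => {
    const cssName = key.replace(/[A-Z]/g, (c) => "-" + c.toLowerCase());
    return `  --${cssName}: ${value};`;
  });
  return `:root {\n${lines.join("\n")}\n}`;
}

// Assemble the system prompt: design constraints plus the data fields the model may use.
function buildSystemPrompt(): string {
  return [
    "You generate React widgets for a car instrument cluster.",
    "Style widgets only with these CSS variables:",
    themeToCss(theme),
    "Read vehicle data only from these fields:",
    JSON.stringify(Object.keys(vehicleData)),
  ].join("\n\n");
}
```

Listing only the allowed variables and fields gives the model a closed vocabulary to work with, which is the point of the theme file.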

Second iteration

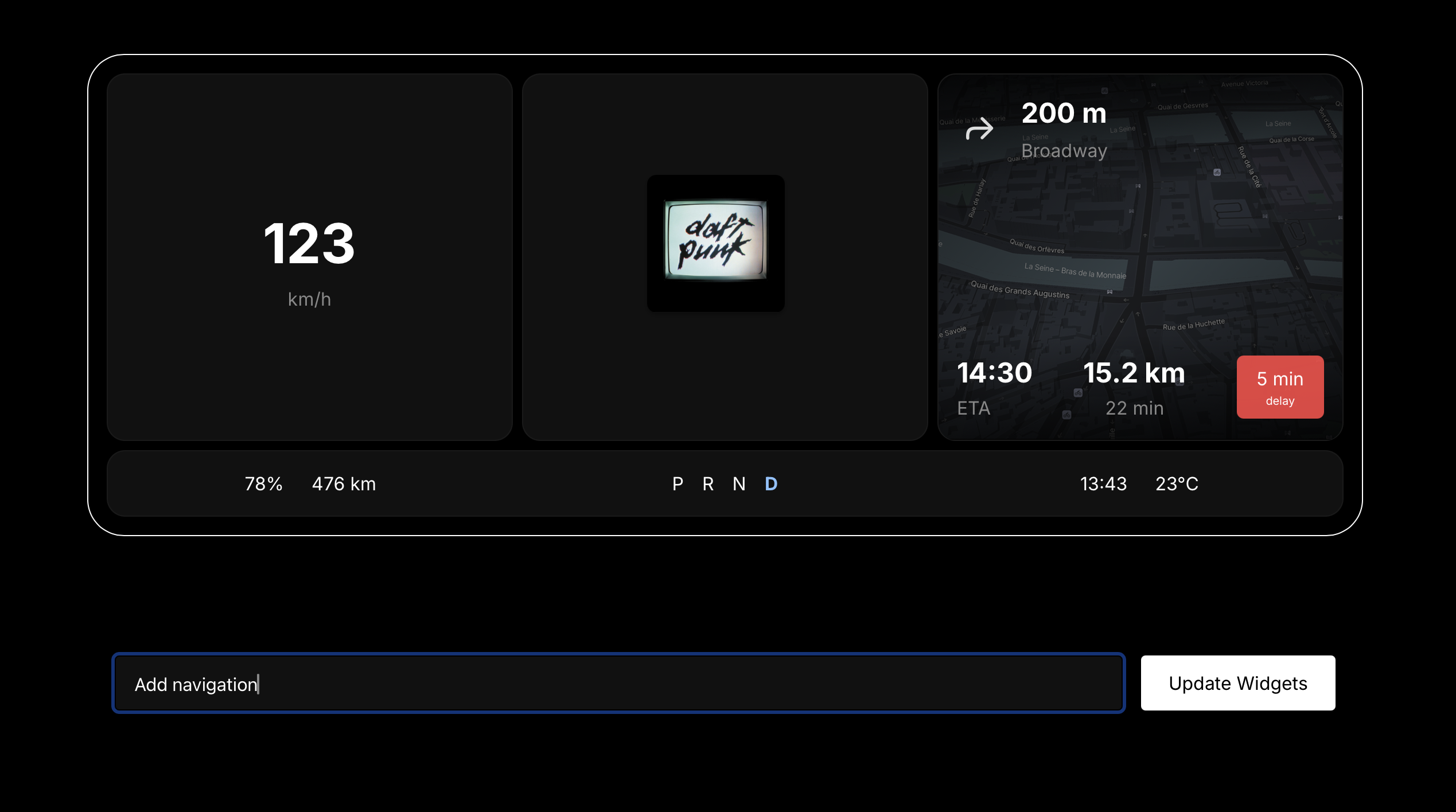

Basic font and color definitions weren't enough to get ChatGPT to create functional instrument cluster widgets. So I fundamentally changed the app's architecture. For the most common widgets—music and navigation—I decided to design and code them myself, while allowing ChatGPT to modify them when needed. Here's how it works: When users type commands like "Add a weather widget" or "Show only the artist name for music," ChatGPT receives this intent along with the current dashboard state. It then translates these instructions into clear, actionable steps stored in a JSON file:

- Remove: Remove a widget (except for the mandatory SpeedWidget)

- Modify: Change the design of an existing widget (e.g., "show only artist name")

- Reorder: Change the order of widgets

- Add: Add a new widget to the grid. If the request matches an existing template, the pre-designed component is added; if it asks for a new feature, a separate ChatGPT API call generates a widget from scratch
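The command set above maps naturally onto a TypeScript discriminated union plus a reducer that applies one command at a time. This is a sketch, not the prototype's code: the JSON field names (`widgetId`, `order`, `instructions`, `template`) and the `Widget` shape are assumptions, and the reducer doesn't enforce the one/two/three widget layout limit.

```typescript
// Hypothetical JSON commands the model returns; field names are illustrative.
type Action =
  | { type: "remove"; widgetId: string }
  | { type: "reorder"; order: string[] }
  | { type: "modify"; widgetId: string; instructions: string }
  | { type: "add"; widgetId: string; template?: string };

interface Widget {
  id: string;
  template: string | null; // null = to be generated from scratch by a separate model call
  customization?: string; // free-text change requested by the user
}

const MANDATORY_WIDGET = "SpeedWidget";
const TEMPLATES = new Set(["music", "navigation"]); // pre-designed components

// Apply one command to the dashboard state and return a new state array.
function applyAction(widgets: Widget[], action: Action): Widget[] {
  switch (action.type) {
    case "remove": {
      const { widgetId } = action;
      if (widgetId === MANDATORY_WIDGET) return widgets; // speed can never be removed
      return widgets.filter((w) => w.id !== widgetId);
    }
    case "reorder": {
      // Follow the model's order (duplicates dropped), then append any widget it left out.
      const order = Array.from(new Set(action.order));
      const byId = new Map(widgets.map((w) => [w.id, w] as const));
      const ordered = order
        .map((id) => byId.get(id))
        .filter((w): w is Widget => w !== undefined);
      const missing = widgets.filter((w) => !order.includes(w.id));
      return [...ordered, ...missing];
    }
    case "modify": {
      // Record the requested change; a later model call rewrites the widget from it.
      const { widgetId, instructions } = action;
      return widgets.map((w) => (w.id === widgetId ? { ...w, customization: instructions } : w));
    }
    case "add": {
      const { widgetId, template } = action;
      if (widgets.some((w) => w.id === widgetId)) return widgets;
      // Known template: reuse the pre-designed component; otherwise mark it for generation.
      const resolved = template !== undefined && TEMPLATES.has(template) ? template : null;
      return [...widgets, { id: widgetId, template: resolved }];
    }
    default:
      // Reached only if unvalidated model JSON contains an unknown command type.
      throw new Error("Unknown action type");
  }
}
```

Keeping the reducer pure makes a bad command easy to reject or undo, since the previous dashboard state is never mutated.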

This is a quick overview of the system with the pre-designed widgets:

My main iterations focused on improving the ChatGPT prompts. Getting it to return specific commands while still allowing for creativity when needed proved tricky, but the end result is promising. For example, I can ask it to add my music, and it adds the pre-designed template:

And then I can ask it to modify the widget to show only the album art:

It doesn't always get it right. For example, I can ask it to add the map widget:

But if I then ask it to show only the next direction and traffic info, ChatGPT transforms it into an 'interesting' design:

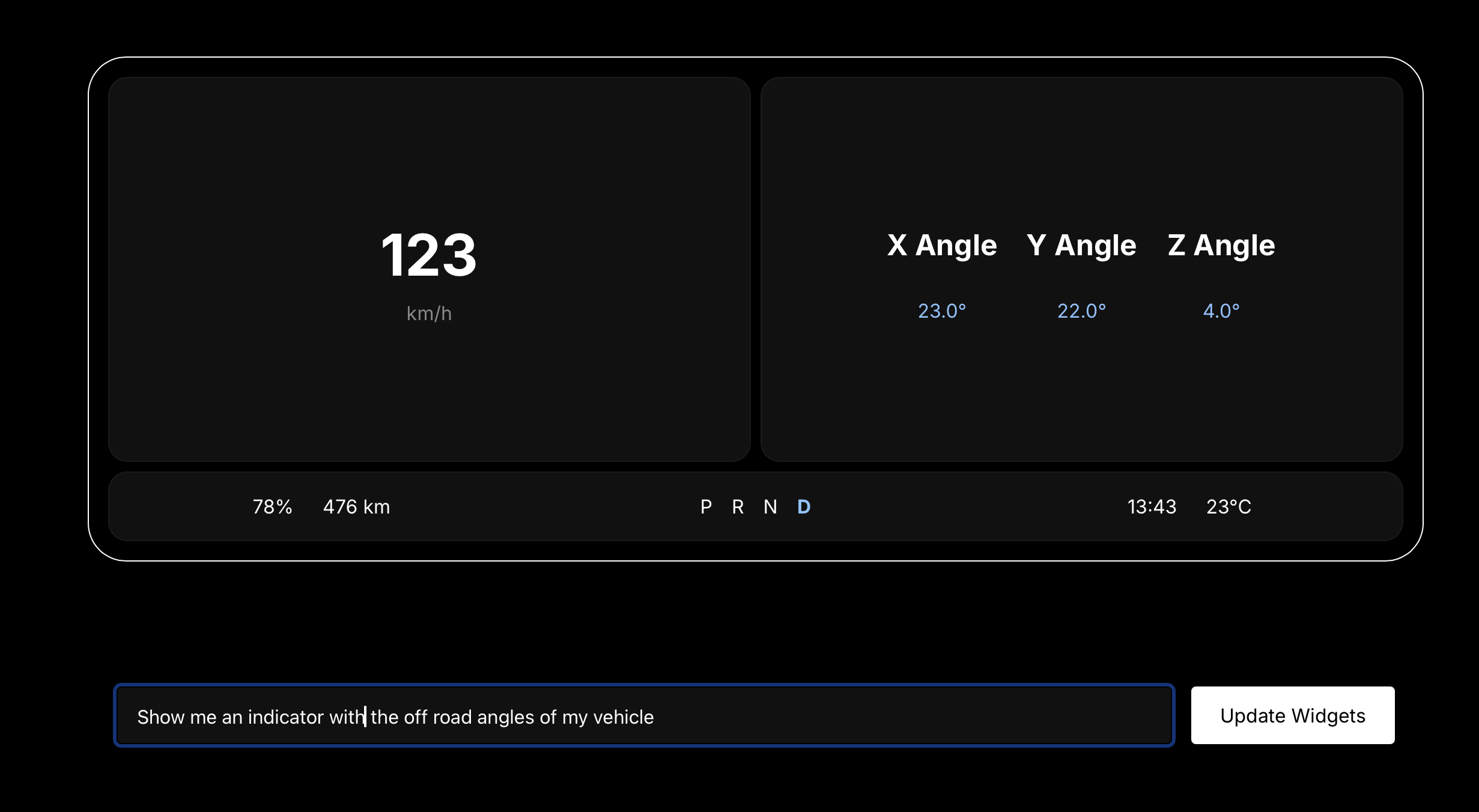

Similarly, when I ask it to generate completely new widgets, the results are hit-and-miss. Sometimes the UI is too basic:

More often, the UI is broken:

You can see that it struggles to make components look and function properly. I tried several prompt iterations and different ChatGPT models. Results improved slightly when switching from 4o-mini to o4-mini, but issues persisted, like these labels being covered by the circle:

These errors can usually be fixed by asking ChatGPT directly to correct them, but ideally the widget should work correctly on the first attempt.
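Since a follow-up request usually fixes the errors, that step can be automated: validate the output and send the problems back before anything is rendered. A minimal sketch, assuming an injected `callModel` function (wire it to whichever LLM client you use) and a `validate` function that reports problems as strings; both are hypothetical, and catching visual issues such as overlapping labels would need a real render or layout check inside `validate`.

```typescript
// Hypothetical message shape and model call; connect `callModel` to your LLM client.
interface Message {
  role: "system" | "user" | "assistant";
  content: string;
}
type CallModel = (messages: Message[]) => Promise<string>;

// Hypothetical checker: returns a list of problems, empty when the widget looks fine.
type Validate = (output: string) => string[];

// Ask for a widget, check it, and feed any problems back to the model for a fix.
async function generateWithRepair(
  callModel: CallModel,
  validate: Validate,
  request: string,
  maxAttempts = 3,
): Promise<string> {
  const messages: Message[] = [{ role: "user", content: request }];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const output = await callModel(messages);
    const problems = validate(output);
    if (problems.length === 0) return output;
    // Same as asking ChatGPT to correct its own mistake, but without the user typing it.
    messages.push({ role: "assistant", content: output });
    messages.push({
      role: "user",
      content: `Fix these problems and return the complete widget again:\n- ${problems.join("\n- ")}`,
    });
  }
  throw new Error(`No valid widget after ${maxAttempts} attempts`);
}
```

Capping the attempts matters in a car: after a few failures it is better to show nothing new than to keep waiting on the model.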

I haven't yet managed to get it to reliably generate functioning UI components. My next direction will be to create a small design system with pre-built components like gauges and graphs that the LLM can use as building blocks.
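A sketch of what that design-system direction could look like: instead of writing JSX and CSS, the model returns a JSON spec that only references pre-built blocks, and the app checks the spec before rendering. The block kinds, field names and data keys below are hypothetical. The example spec encodes the consumption-graph request from earlier.

```typescript
// Hypothetical building blocks the model composes instead of writing raw JSX and CSS.
type Block =
  | { kind: "gauge"; dataKey: string; min: number; max: number; label: string }
  | { kind: "lineGraph"; dataKey: string; windowKm: number; compareToAverage: boolean }
  | { kind: "text"; dataKey: string; label: string };

// Hypothetical vehicle data fields the blocks may bind to.
const DATA_KEYS = new Set(["speedKmh", "consumptionKwhPer100Km", "batteryPercent", "outsideTempC"]);
const KINDS = new Set(["gauge", "lineGraph", "text"]);

type SpecResult = { ok: true; blocks: Block[] } | { ok: false; errors: string[] };

// Shallow check of a model-produced spec: block kind, data binding and gauge range.
// Other fields (labels, windowKm, ...) are not validated in this sketch.
function checkSpec(raw: unknown): SpecResult {
  if (!Array.isArray(raw)) return { ok: false, errors: ["spec must be an array of blocks"] };
  const errors: string[] = [];
  raw.forEach((item: unknown, i) => {
    if (typeof item !== "object" || item === null) {
      errors.push(`block ${i}: not an object`);
      return;
    }
    const b = item as Record<string, unknown>;
    if (typeof b.kind !== "string" || !KINDS.has(b.kind)) errors.push(`block ${i}: unknown kind`);
    if (typeof b.dataKey !== "string" || !DATA_KEYS.has(b.dataKey)) {
      errors.push(`block ${i}: unknown dataKey`);
    }
    if (b.kind === "gauge" && !(typeof b.min === "number" && typeof b.max === "number" && b.min < b.max)) {
      errors.push(`block ${i}: gauge needs numeric min < max`);
    }
  });
  return errors.length === 0 ? { ok: true, blocks: raw as Block[] } : { ok: false, errors };
}

// "Graph my consumption over the last 5 km compared to the average" as a spec:
const spec = [{ kind: "lineGraph", dataKey: "consumptionKwhPer100Km", windowKm: 5, compareToAverage: true }];
```

Because rendering and styling live in the pre-built blocks, the model can only get the composition wrong, not the look of a gauge or graph.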

Visual theming exploration

While building this prototype, I realized this setup isn't only relevant for features, but also for visual design. This opened up an interesting possibility: co-creating custom UI themes with ChatGPT. I added a list of six different fonts and expanded the 'modify' action to include theming values, making it possible to update colors dynamically. Whenever a prompt references visual appearance, ChatGPT generates a color scheme applied to all UI elements and matches the prompt to one of the six fonts.
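Because the model only picks values here, theming lends itself to strict validation. The sketch below assumes a hypothetical list of six fonts (the post doesn't name them) and hex color values; the 4.5:1 text/background contrast floor is borrowed from WCAG 2.x as an extra legibility guard, not something the prototype is described as doing.

```typescript
// Hypothetical font list; the post mentions six fonts but not which ones.
const FONTS = ["Inter", "Roboto Mono", "Orbitron", "Playfair Display", "Oswald", "Lato"] as const;
type Font = (typeof FONTS)[number];

interface Theme {
  font: Font;
  colors: { background: string; text: string; accent: string };
}

// What the model proposes; any field may be missing or malformed.
interface ThemeProposal {
  font?: string;
  colors?: Partial<Record<keyof Theme["colors"], string>>;
}

const HEX = /^#[0-9a-fA-F]{6}$/;

// WCAG 2.x relative luminance of a #rrggbb color.
function luminance(hex: string): number {
  const [r, g, b] = [1, 3, 5].map((i) => {
    const c = parseInt(hex.slice(i, i + 2), 16) / 255;
    return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
  });
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// WCAG contrast ratio between two colors, from 1 (identical) to 21 (black on white).
function contrastRatio(a: string, b: string): number {
  const [light, dark] = [luminance(a), luminance(b)].sort((x, y) => y - x);
  return (light + 0.05) / (dark + 0.05);
}

// Merge a proposal into the current theme: unknown fonts and non-hex colors are ignored,
// and a color scheme whose text/background contrast is below 4.5:1 is rejected.
function applyThemeProposal(current: Theme, proposal: ThemeProposal): Theme {
  const font = FONTS.find((f) => f === proposal.font) ?? current.font;
  const colors = { ...current.colors };
  for (const key of Object.keys(colors) as (keyof Theme["colors"])[]) {
    const value = proposal.colors?.[key];
    if (value !== undefined && HEX.test(value)) colors[key] = value;
  }
  const readable = contrastRatio(colors.text, colors.background) >= 4.5;
  return { font, colors: readable ? colors : current.colors };
}
```

A "Formula 1-inspired" request can then change fonts and accents freely, while a scheme that would make the speed unreadable simply falls back to the current colors.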

Key learnings & next steps

This weekend exploration served as a technical design proof of concept. Generative UI shows promise for automotive interfaces, but several challenges emerged:

What worked

- The hybrid approach (pre-designed templates + AI modifications) proved more reliable than pure AI generation

- Natural language commands for layout changes worked surprisingly well

- Dynamic theming opened up interesting personalization possibilities

What I'd do differently

- Start with a comprehensive design system rather than basic CSS guidelines

- Focus on voice accessibility from the beginning, considering hands-free operation while driving

- Test with more diverse user commands to identify edge cases earlier

Next steps

- Build a component library with gauges, graphs, and other automotive-specific UI elements

- Explore prompt engineering techniques to improve AI-generated component reliability

- Consider safety implications and fail-safes for mission-critical information display

The question of whether this should be added to vehicles wasn't what I set out to answer, but my project did get me thinking. Being able to access any information in your preferred format while keeping your hands on the wheel is powerful. Moreover, being able to personalize the visual design to exactly match your preferences could be a compelling feature for certain carmakers. However, the non-deterministic nature of LLMs remains a significant challenge. The instrument cluster, with its safety-critical purpose, isn't the ideal place to embed generative UI without robust safeguards. Future explorations should focus on infotainment systems or secondary displays where errors are less consequential.